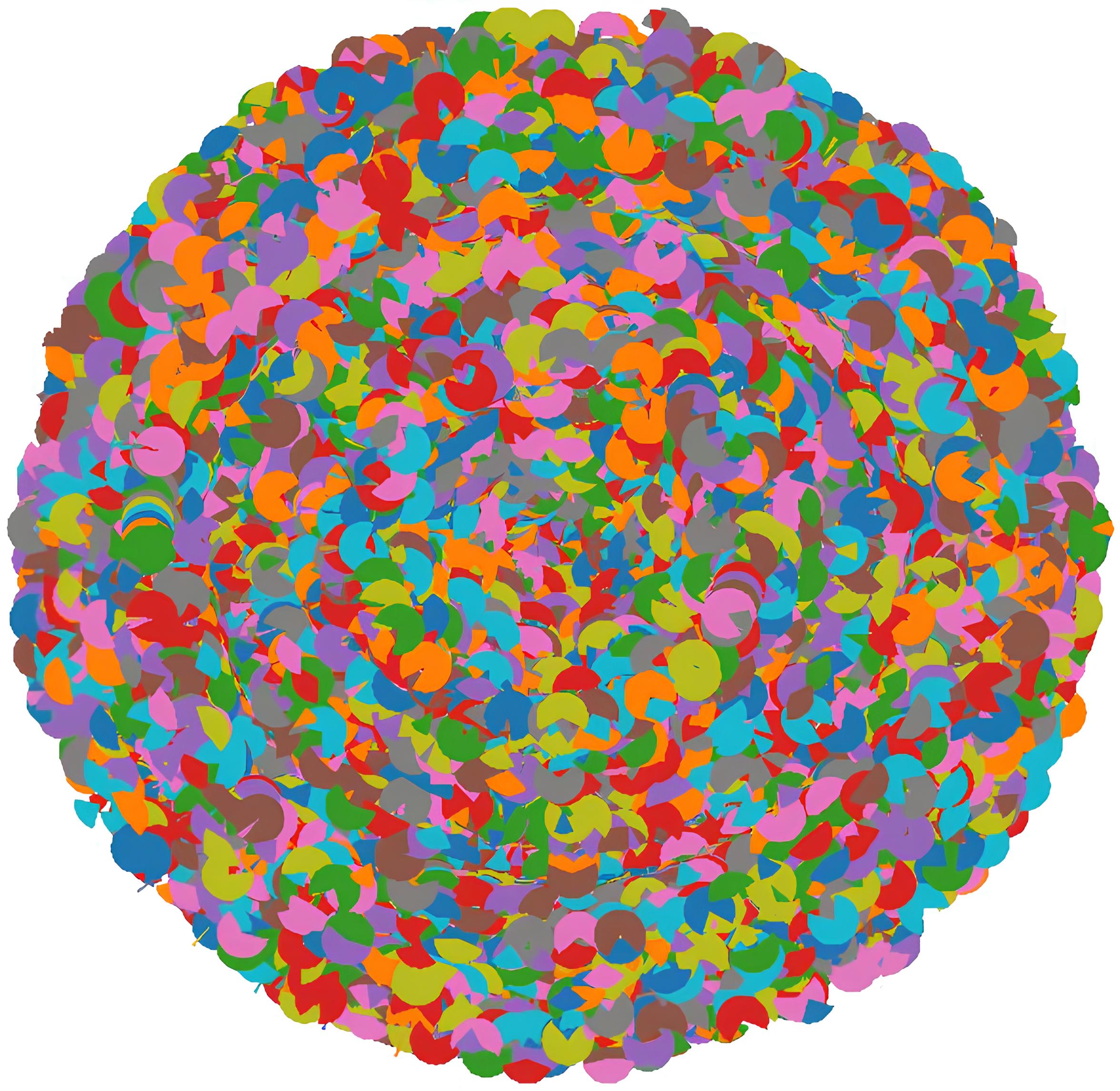

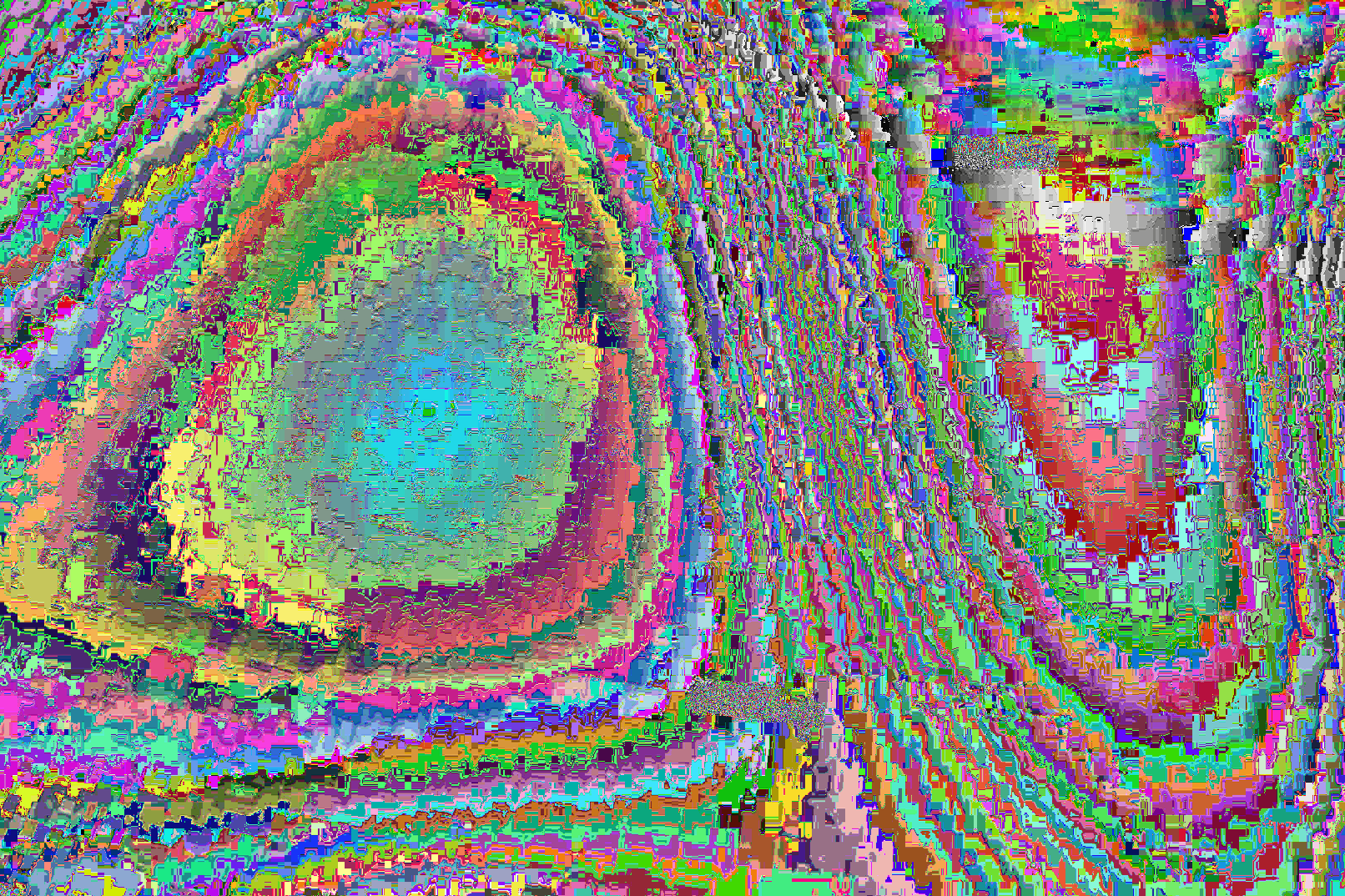

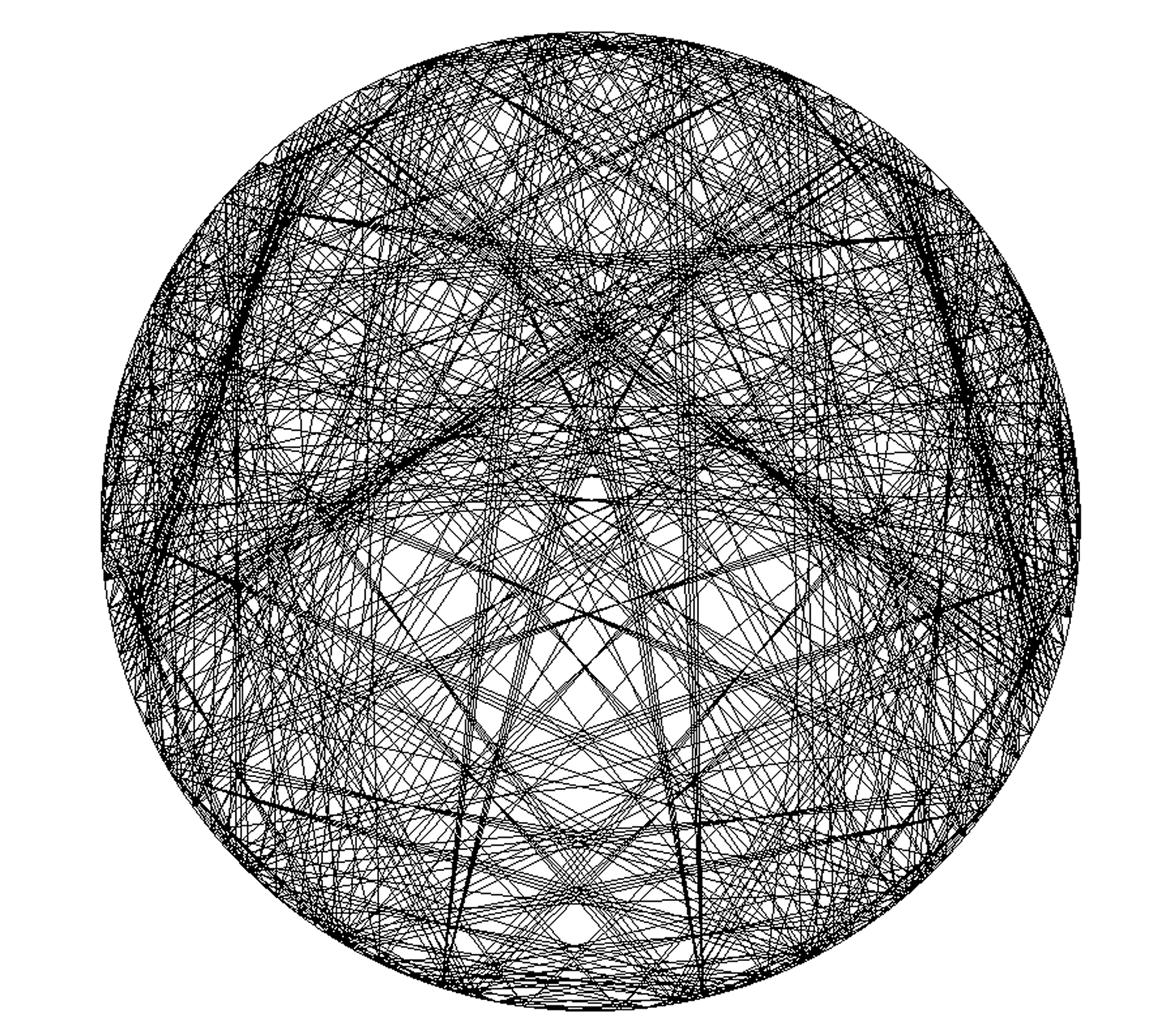

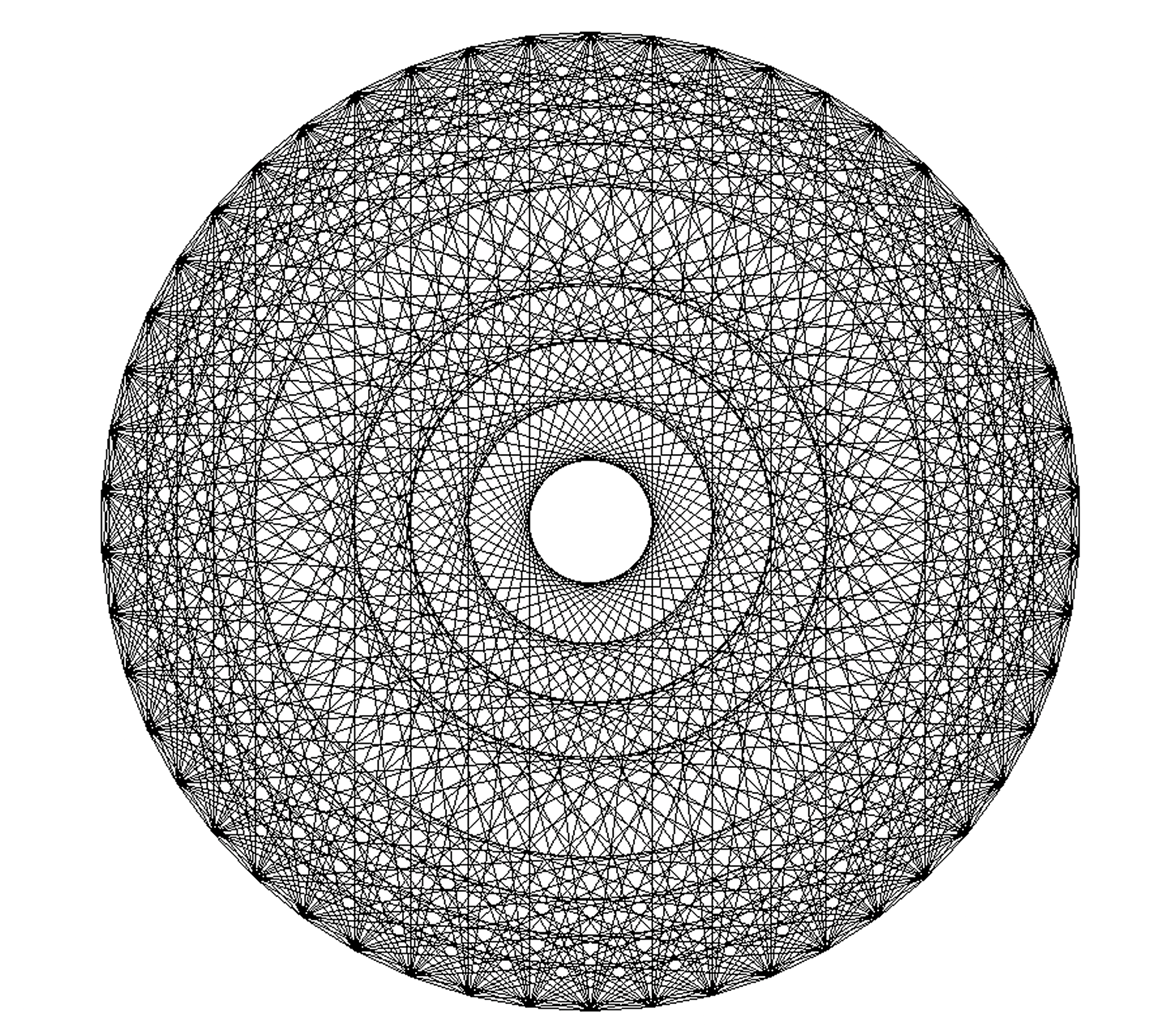

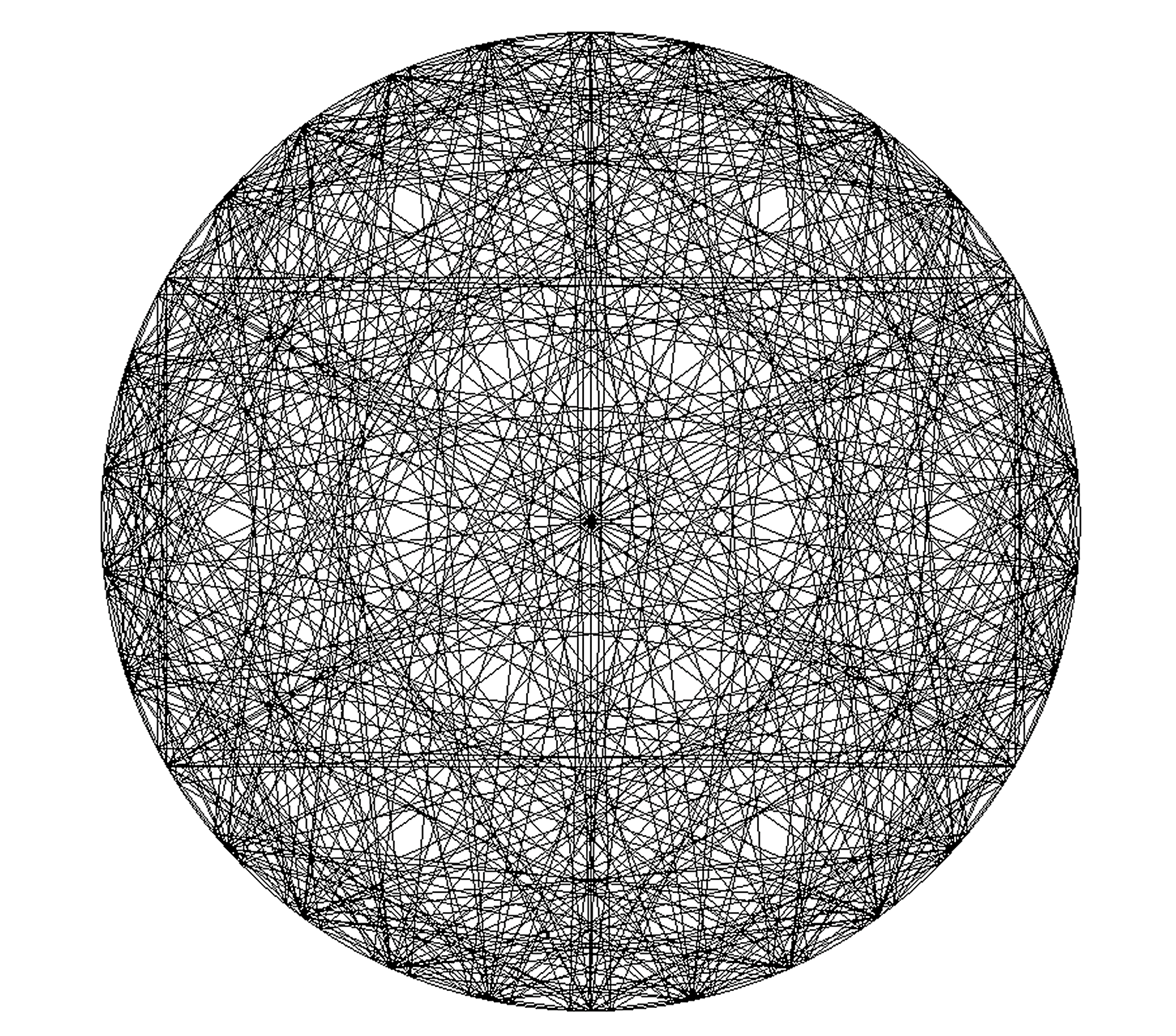

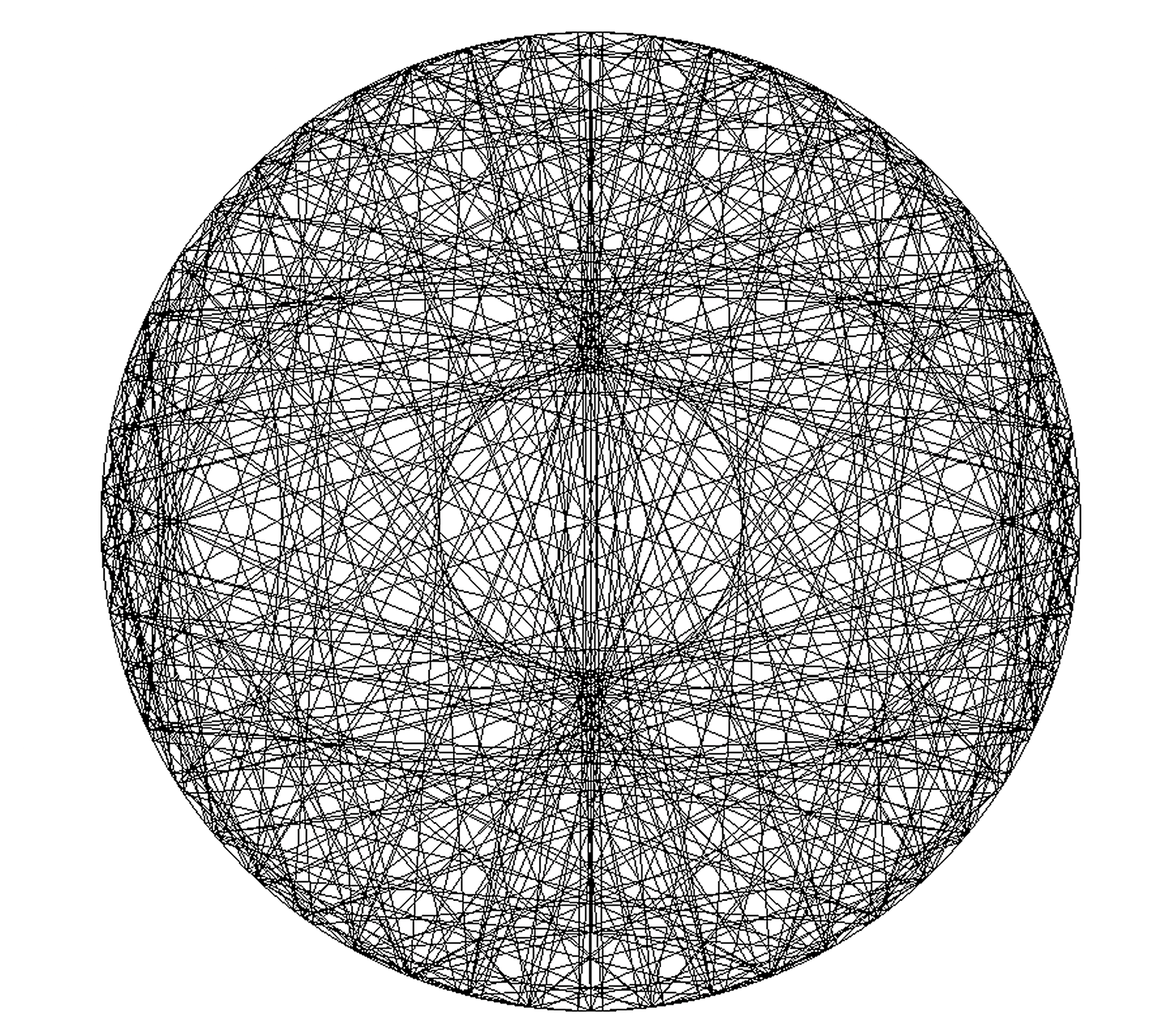

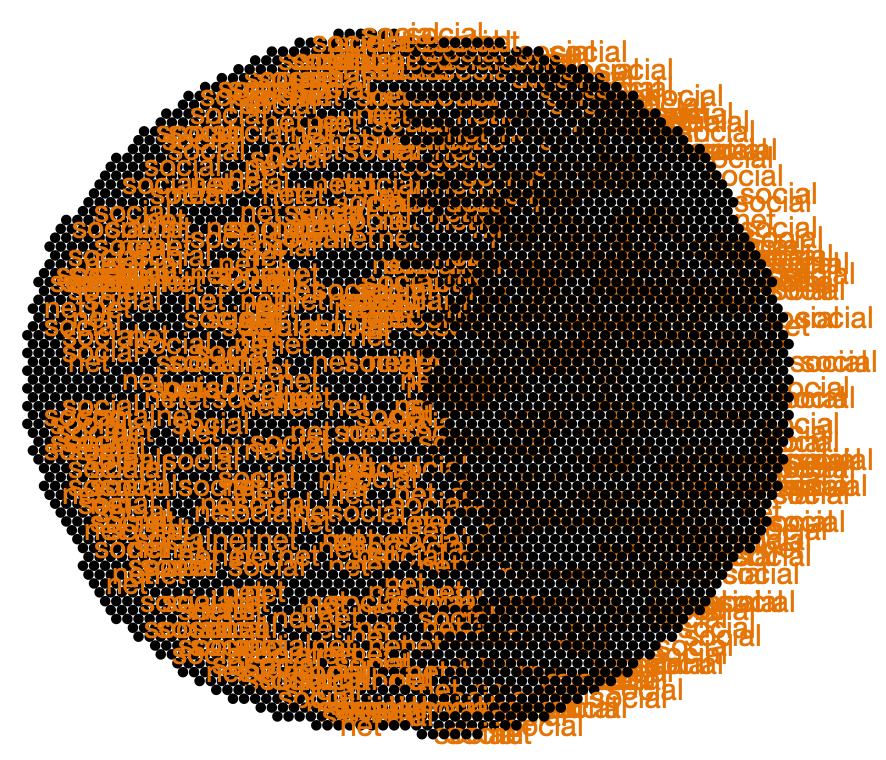

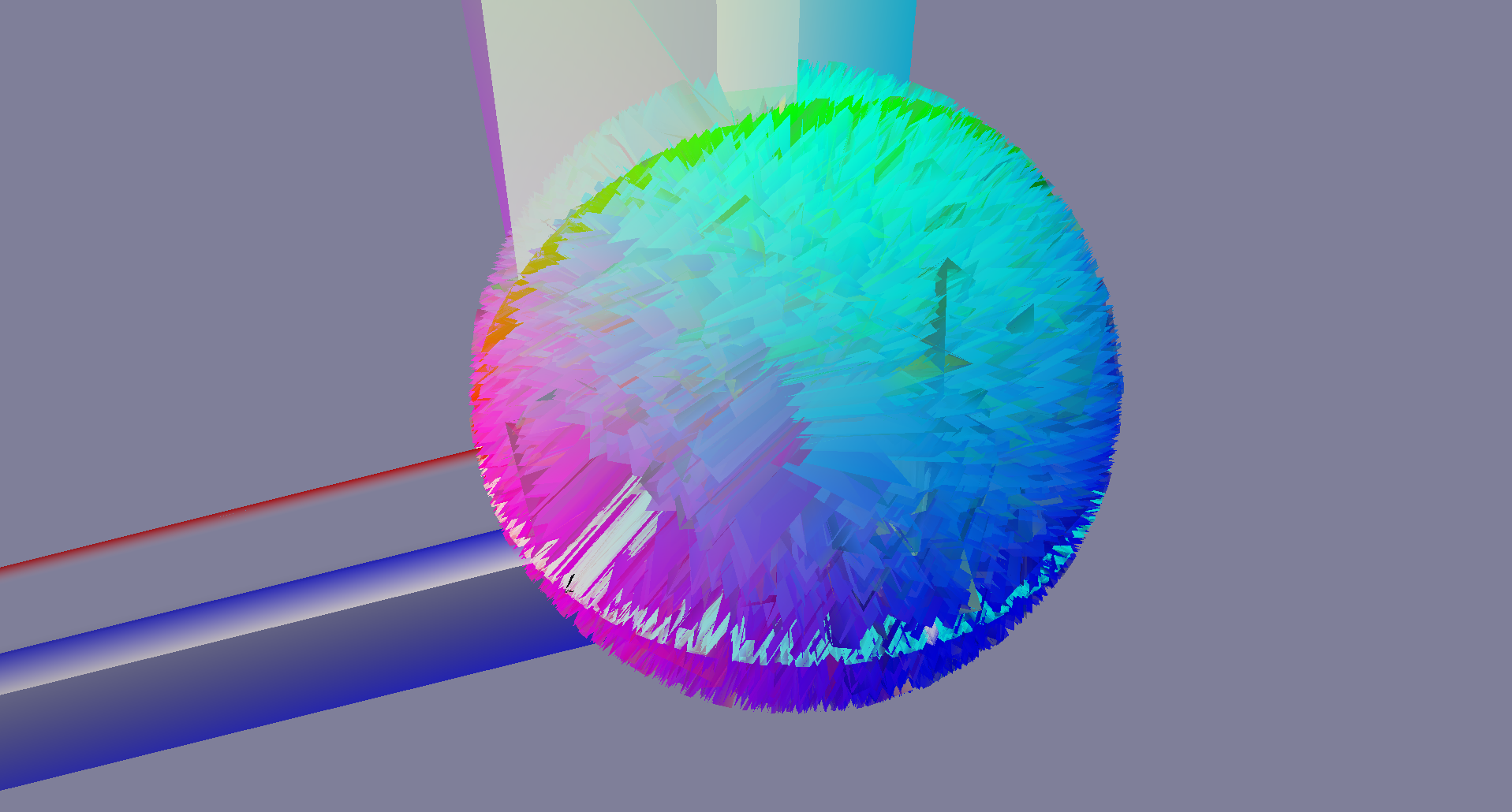

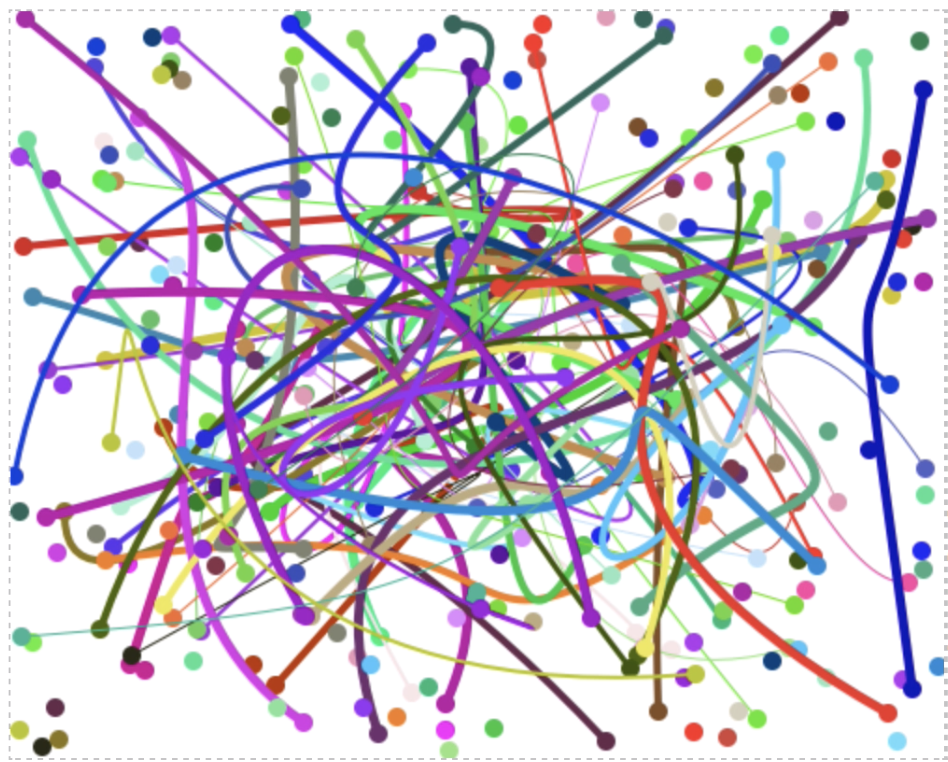

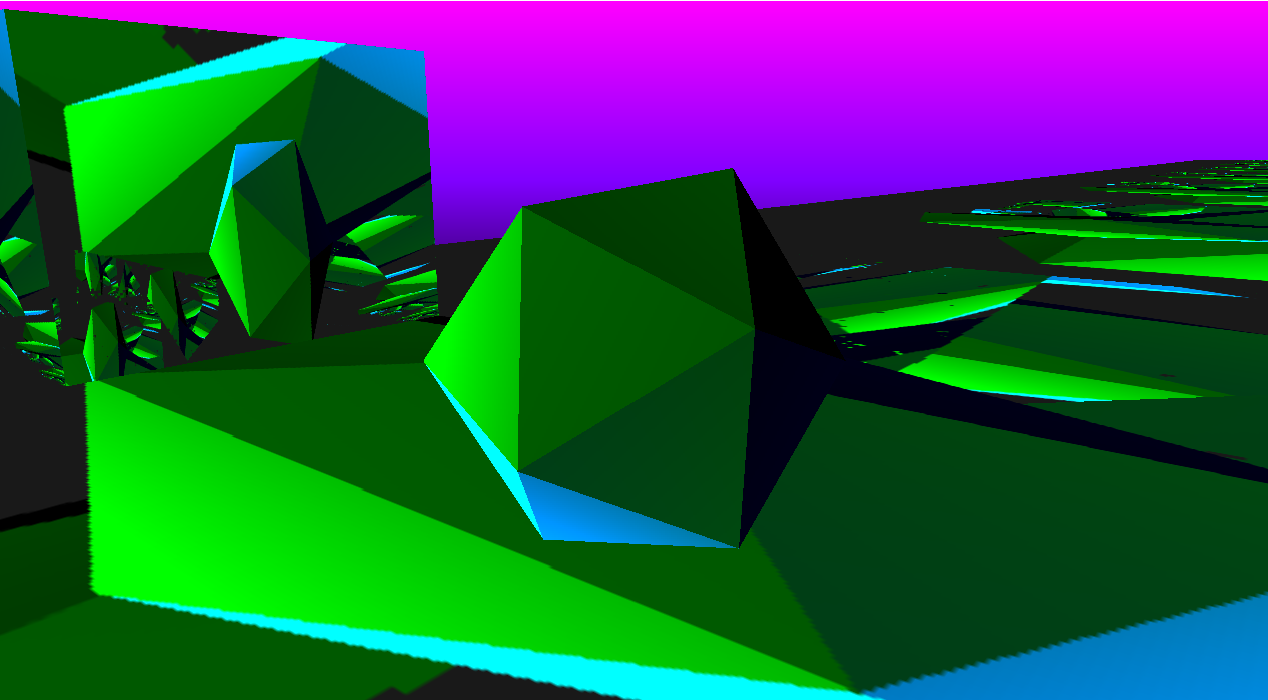

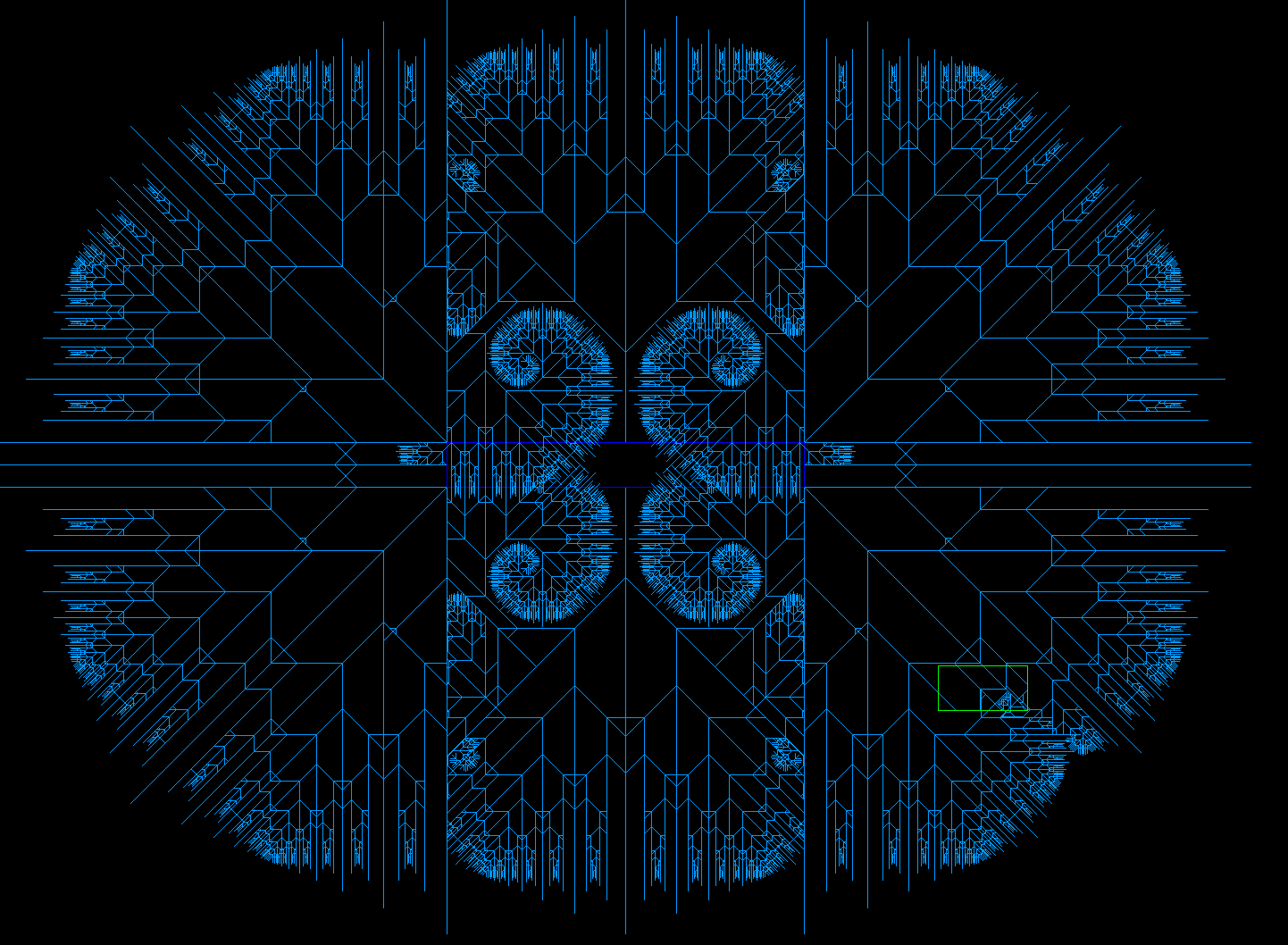

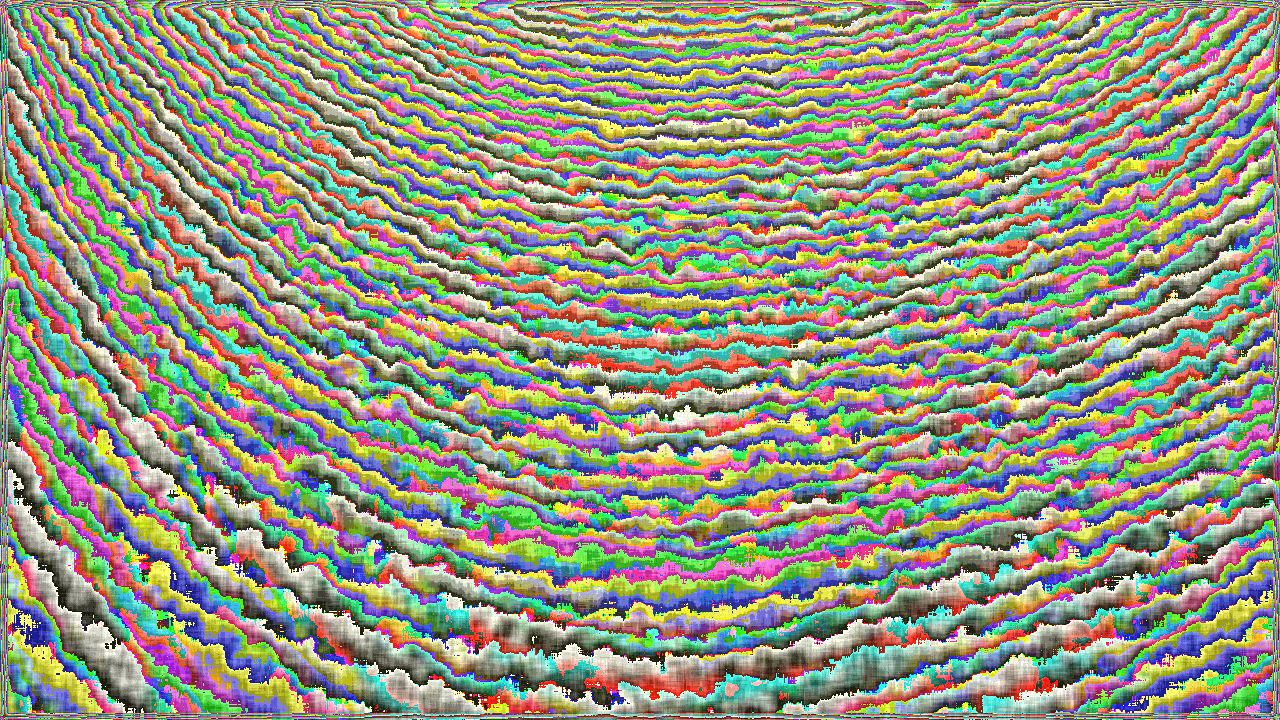

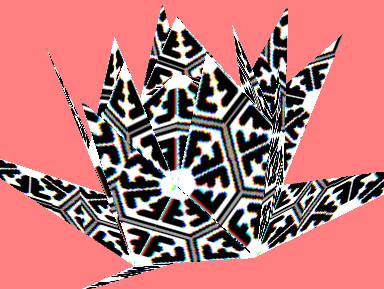

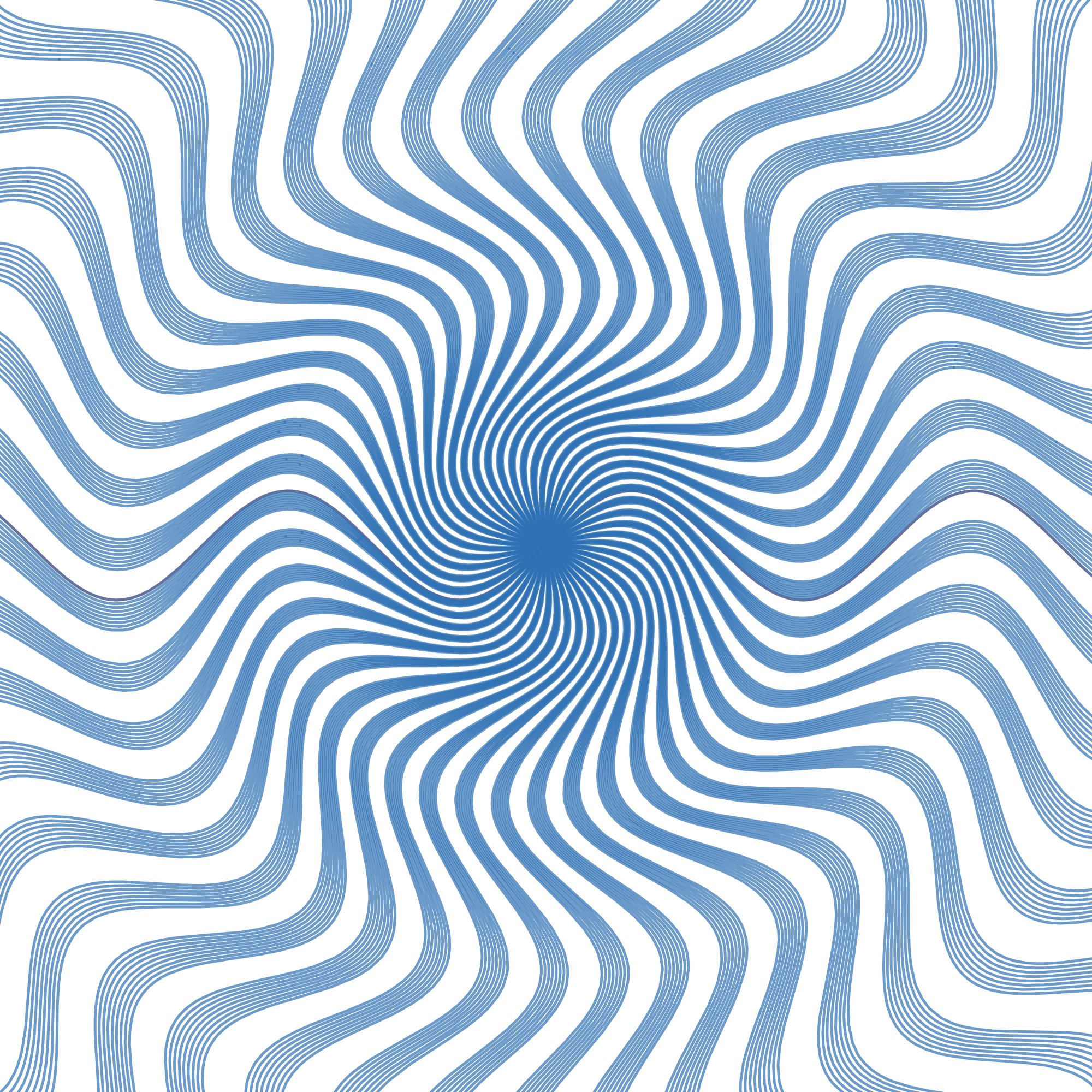

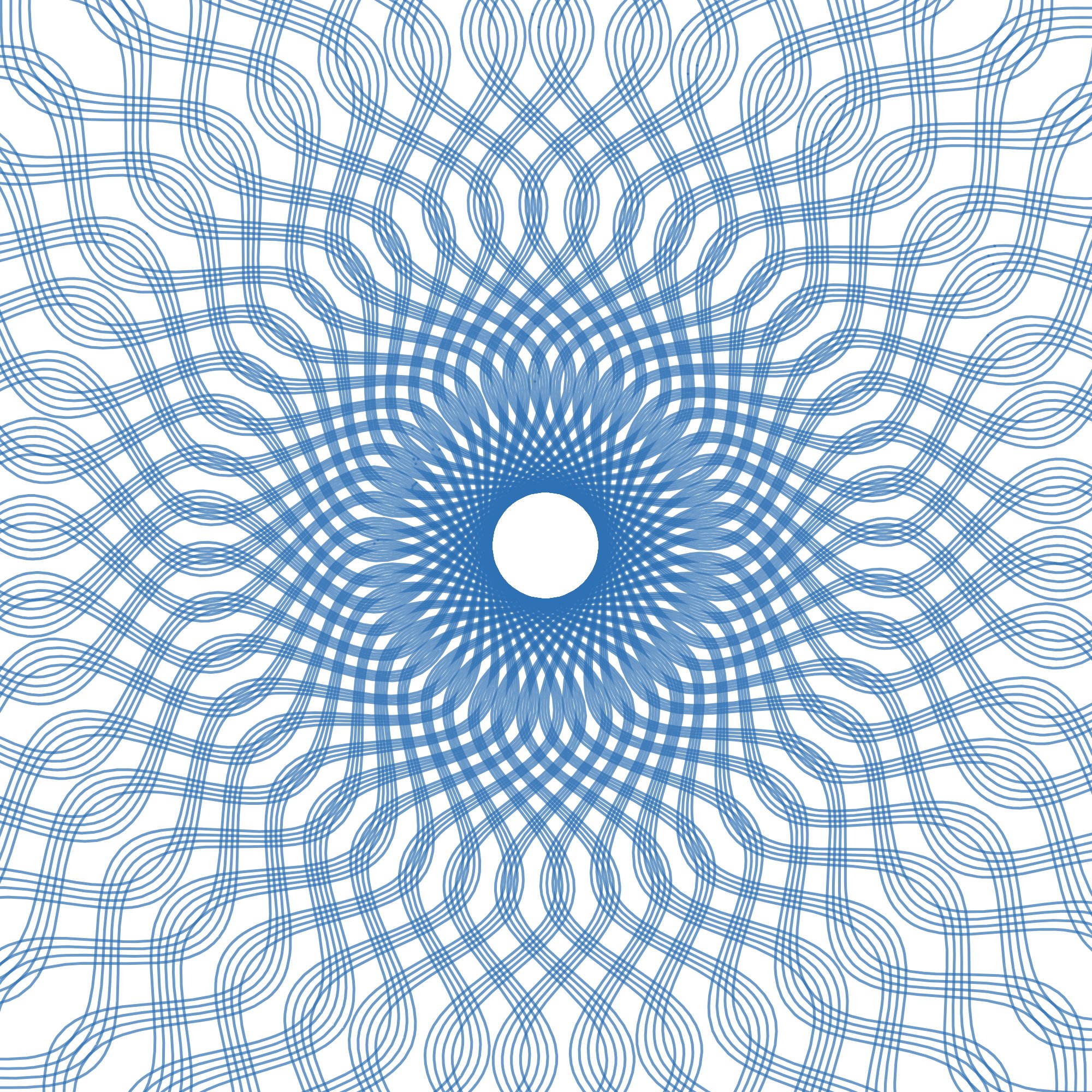

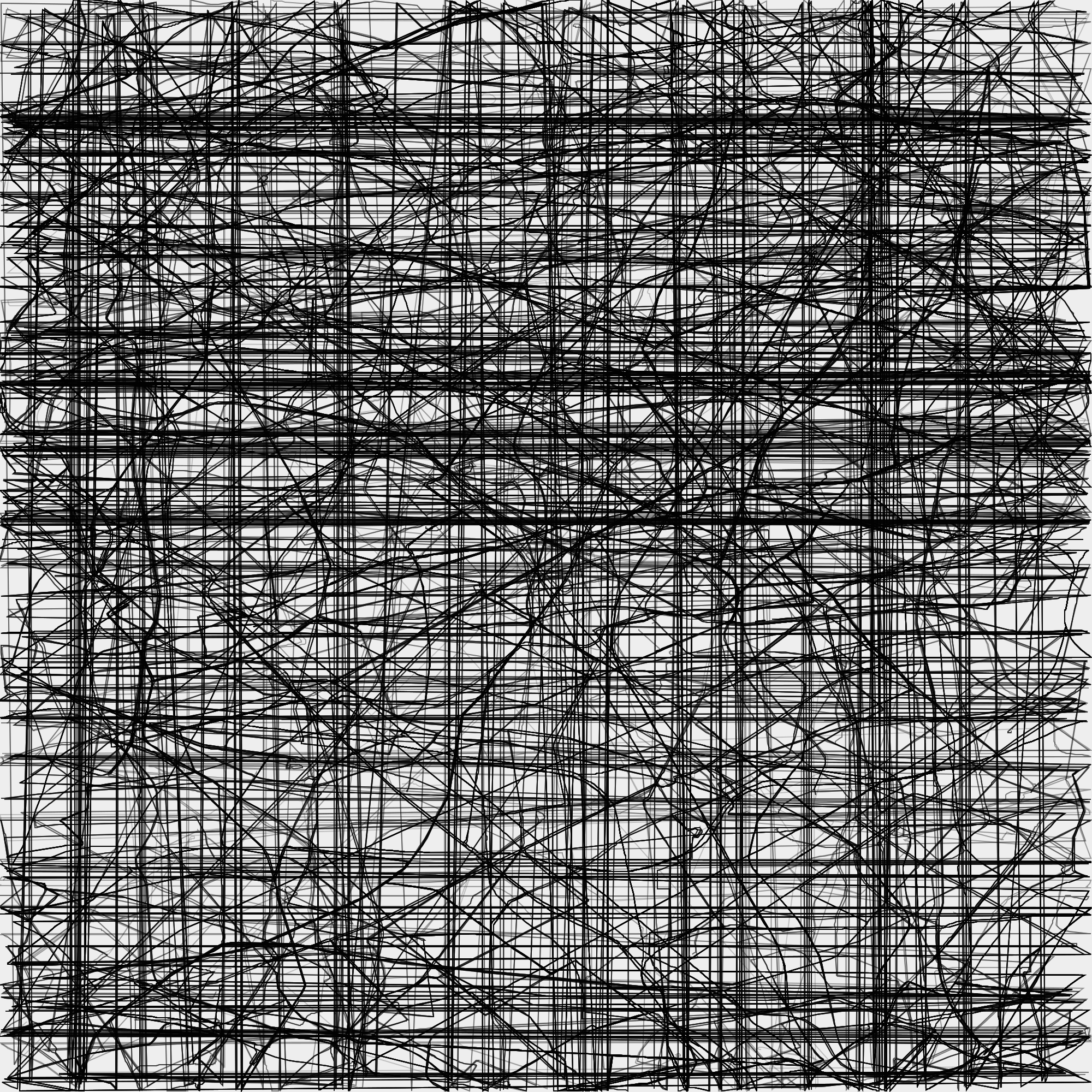

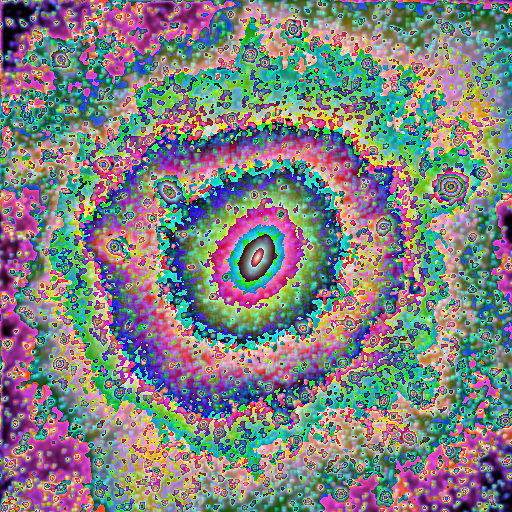

After an inner and an outer circle were created, Inkscape's Interpolate Between Paths extension was applied not only to produce the rest of the content but also to determine the position of the actual creator of this work.

The exact parameters of the process may have been lost to time; evidence of the author's inability to count to 27, however, has not.
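
The interpolation step described above can be pictured with a short Python sketch. It assumes the two circles are concentric and that the effect can be approximated by evenly spaced (linear) radii. The functions `interpolate_radii` and `circles_to_svg` and the `steps` parameter are hypothetical illustrations, not the code of Inkscape's extension. With both end circles kept, `steps` intermediate circles yield `steps + 2` shapes in total, so a hypothetical target of 27 circles would need 25 intermediate steps.

```python
"""Minimal sketch: linearly interpolate between two concentric circles.

Assumption: this only mimics the idea of interpolating between an inner and
an outer circle; it is NOT the implementation of Inkscape's extension.
"""


def interpolate_radii(inner_r: float, outer_r: float, steps: int) -> list[float]:
    """Return radii from inner_r to outer_r with `steps` intermediate values.

    Both end circles are included, so the result has steps + 2 entries.
    """
    if steps < 0:
        raise ValueError("steps must be non-negative")
    gaps = steps + 1  # number of intervals between consecutive circles
    return [inner_r + (outer_r - inner_r) * i / gaps for i in range(gaps + 1)]


def circles_to_svg(radii: list[float], cx: float = 100.0, cy: float = 100.0) -> str:
    """Render each radius as an unfilled SVG <circle> centred on (cx, cy)."""
    size = 2 * max(cx, cy)
    body = "\n".join(
        f'  <circle cx="{cx}" cy="{cy}" r="{r:.3f}" fill="none" stroke="black"/>'
        for r in radii
    )
    return (
        f'<svg xmlns="http://www.w3.org/2000/svg" width="{size}" height="{size}">\n'
        f"{body}\n</svg>"
    )


if __name__ == "__main__":
    # Hypothetical target: 27 circles in total, i.e. 25 intermediate steps.
    radii = interpolate_radii(10.0, 90.0, steps=25)
    print(len(radii))  # 27
    svg_text = circles_to_svg(radii)
```

Linear spacing is the simplest choice; a non-linear spacing would only change how `i / gaps` is mapped before scaling the radius difference.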
